Build system options in 2019

It's coming up to the end of 2019 and many people have various end-of-year meets. I was at an end-of-year lunch yesterday and somehow we got talking about editor configurations and the whole automatic formatting topic. As it turns out I'm a big fan of auto-formatters for code, since they save a whole lot of time and mental effort that would otherwise be expended on silly little things.

One thing that came up from this was whether these tools would correctly handle the tabs vs spaces distinction in Makefiles. This tabs vs spaces thing is one of the things I despise about the Makefile format, since representing important semantic differences with text that renders in a visually indistinguishable way is a poor design choice. Thankfully, tooling exists that will parse and syntax highlight/format Makefiles correctly, and this reduces the pain of that design mistake somewhat. But as I was explaining at the lunch, this actually isn't my biggest issue with Makefiles, and that's why I prefer some other options these days. Given that it's 2019, here's a list of some alternatives to Make that you may want to consider:

- Tup: super fast

- SCons: Python-based and can be extended

- Waf

- Ninja: useful if you are creating your own build system

But first, a bit of backstory. I used to be a fan of Makefiles for build systems[2]. It's very useful to have something that will perform some dependency resolution and only rebuild the parts of your project that require rebuilding. You can put one of these together quite quickly, and that can reduce the friction of getting a build system up and running. Once you have a lot of experience with big builds, you'll very quickly come to appreciate that the build step itself can become an enormous time sink; you absolutely do not want to have to do this by hand. Automating this process is a huge win, and Makefiles are right there for you if you want to do this. Make has been around since 1976 and GNU Make is packaged with many *nix and macOS systems, so there's no shortage of people who use it. This leads to a lot of tutorial code and resources that can help you get started.

The issues with Make mostly come up long after the toy-problem stage; with production systems, the problems with Make become very painful. The ease of getting started with Make and the early wins it provides set the stage for a path dependence that leads to horrible Make build systems.

The main issue with Make is that various parts of the dependency resolution are actually broken in ways that impact performance and correctness.

Makefile incorrectness

So how did I go from being a staunch Make advocate to someone who is staunchly against it? A few years back I was working on the build system for a microelectronics project. The nature of this project meant that we had to cross-compile to get the binaries for various microcontroller target platforms (importantly, this included various non-x86 ones). There was also a requirement that this build system could be run in a cross-platform environment. This quickly turned into a nightmare due to cross-platform issues (I could easily write a whole post about the pain of developing cross-platform things in the pre-Windows Subsystem for Linux era, when systems depended on POSIX-like components that weren't available on Windows installs). Once the cross-platform part was sorted out, through much pain and suffering, we had a build system that could cross-compile, but it wasn't good. Perhaps we should have just used CMake for this from the beginning? Having put up with that initial pain to get cross-platform support, we quickly found ourselves in a brutal path-dependence situation fuelled by the sunk-cost fallacy: we had a crappy build system, but we'd spent a lot of effort getting it to where it was. We didn't even end up with a fast-like-a-Concorde system at the end of this for our sunk-cost efforts[1].

Part of the pain came from the intrinsic nature of a large project that required cross-compilation; in a big project such as this, organizing the build starts to get hard. There are factors here that remain hard whatever tooling you use. To get started I used a recursive make approach, which at first seemed sufficient. I say "seemed" because tests were suspiciously failing when changes were made, and when we looked into the compiled output it turned out that various parts of the binaries were not being rebuilt when they were supposed to be. The change detection was faulty; in short, we had independently rediscovered what is described in the "Recursive Make Considered Harmful" article: recursive make does not reliably deal with dependencies.
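The recursive make failure can be reproduced in miniature. What follows is a toy Python simulation, not real make: the two-directory layout, the rule tables and the app-before-lib visit order are all hypothetical, chosen to expose the problem. Each simulated sub-make only knows the rules for its own directory, so the app/ pass can compare the timestamp of lib/libfoo.a but cannot bring it up to date. A single make instance that sees the whole graph handles the same edit correctly.

```python
class FakeFS:
    """In-memory stand-in for a file system: maps path -> modification time."""

    def __init__(self):
        self.mtime = {}
        self.tick = 0

    def touch(self, path):
        # Every write gets a strictly newer timestamp than anything before it.
        self.tick += 1
        self.mtime[path] = self.tick


def make(target, rules, fs, log):
    """Make-style update of `target` using only the rules this instance can see.

    A target is rebuilt if it is missing or older than any prerequisite.
    Prerequisites without a visible rule are plain files whose timestamps are
    compared but which this instance cannot rebuild.
    """
    if target not in rules:
        return  # plain file, or a target owned by another directory's make
    deps = rules[target]
    for dep in deps:
        make(dep, rules, fs, log)
    newest_dep = max((fs.mtime.get(d, 0) for d in deps), default=0)
    if target not in fs.mtime or newest_dep > fs.mtime[target]:
        fs.touch(target)
        log.append(target)


# Hypothetical project: app/app links against lib/libfoo.a.
LIB_RULES = {"lib/foo.o": ["lib/foo.c"], "lib/libfoo.a": ["lib/foo.o"]}
APP_RULES = {"app/main.o": ["app/main.c"], "app/app": ["app/main.o", "lib/libfoo.a"]}
ALL_RULES = {**LIB_RULES, **APP_RULES}


def fresh_tree():
    """A fully built tree in which a library source has just been edited."""
    fs = FakeFS()
    fs.touch("lib/foo.c")
    fs.touch("app/main.c")
    make("app/app", ALL_RULES, fs, [])  # initial clean build
    fs.touch("lib/foo.c")               # developer edits lib/foo.c
    return fs


def recursive_build(fs):
    """Top-level make visits app/ then lib/, each sub-make with only its own rules."""
    log = []
    make("app/app", APP_RULES, fs, log)
    make("lib/libfoo.a", LIB_RULES, fs, log)
    return log


def non_recursive_build(fs):
    """One make instance that sees the whole dependency graph."""
    log = []
    make("app/app", ALL_RULES, fs, log)
    return log


print(recursive_build(fresh_tree()))      # ['lib/foo.o', 'lib/libfoo.a']
print(non_recursive_build(fresh_tree()))  # ['lib/foo.o', 'lib/libfoo.a', 'app/app']

fs = fresh_tree()
recursive_build(fs)
print(fs.mtime["app/app"] < fs.mtime["lib/libfoo.a"])  # True: app/app is stale
```

The recursive build leaves app/app older than the library it links against until someone runs the build again. Visiting lib/ first would fix this particular case, but keeping such orderings correct by hand across a large tree is the kind of fragility the article describes.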

Knowing that I needed to keep making progress, I looked into my options and started to investigate SCons as an alternative. However, this was a microelectronics/IoT company, not a Python shop, so I was a little hesitant to introduce it as a solution. It was nonetheless a feasible choice for this type of work. I seem to remember it being kinda slow at the time for their particular workload, but it produced correct binaries. (My recollection of SCons's performance is unreliable here; it's nothing like the glacially paced Make builds that were traumatically and permanently burned into my memory.)

Due to various issues with the other build platforms I experimented with at the time, we estimated that it would be better to adapt the Make-based system we had than to switch to a completely different system, given the time and effort it would take to research and then implement one. The nasty path dependence came up again and kept us pushing on with an increasingly bad base, with more and more layers of hacks applied on top of it. So I ended up rolling my own dependency resolution solution that got bolted on to our existing cross-platform Make setup. This meant we could have a system with sub-make files that properly dealt with the dependencies. In the process I ended up writing something a bit like Tup, except buggy, slower, harder to maintain and just generally awful. If someone in the future has to deal with this system, I'm sorry, but maybe you can take some comfort from the fact that it's just a terrible system that should be thrown in the bin. There's no secret-but-important Chesterton's fence reason why it exists; just kill it with fire.

Continuing to use Make in this situation despite the incorrect dependency resolution was, in retrospect, a case where the sunk-cost fallacy hugely influenced the decision.

Makefile performance

While the redesigned non-recursive Make system I created was painfully slow, it did end up working correctly. But the slowness was so severe that it started to have a very real impact on developer productivity. Another option I remember being interested in at the time was Tup, because it gets dependency resolution right and is fast.

Recursive make would sometimes take forever to figure out that it didn't need to rebuild anything. This was very annoying, but ultimately it wouldn't have been a deal breaker if it was the only problem. It wasn't the only problem, though, as the section about correctness above shows. The frustration of changing a single .c or .cpp file and waiting over a minute for the change to be picked up before the compilation step ran was enough to force us to look for alternatives.

Tup is extremely fast compared to Make; there are some good benchmarks that show how the performance of Make rapidly declines on large projects while Tup remains fast.

So why is Make slow and Tup fast?

There are a few reasons why Make is slow, and why it's even slower on File Allocation Table (FAT) based file systems.

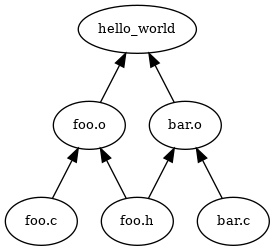

This picture from the Tup website shows quite clearly how Tup gets this right:

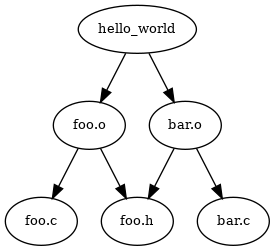

Compare this to Make:

You can see here that Make sometimes has to do the same check multiple times per file because of the way it deals with dependency resolution. When the directed acyclic build graph gets big, the amount of duplicated work can get very large. The result can be massive slowdowns, especially when the build system has to hit the disk to get information. This is a classic case of poor algorithmic complexity, so it keeps causing slowdowns even on fast hardware. While upgrading to something like fast SSDs can make the issue a bit less severe, the performance problems can still occur in big enough projects, because the poor algorithm results in a huge number of disk operations.
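To make the complexity argument concrete, here is a toy Python model. It is an illustration under stated assumptions, not a measurement of GNU Make's actual implementation: the graph shape (n sources that all include one shared header, linked into a single binary) and the function names are made up for this sketch. It counts node visits for three strategies: a top-down walk from the final target with no memoization, the same walk with memoization, and a Tup-style walk that starts from a list of changed files (which Tup can optionally obtain from a file-system monitor) and moves up the graph.

```python
from collections import defaultdict


def build_graph(n_sources):
    """Hypothetical project: n .c files, each compiled to a .o that also depends
    on one shared header, with every .o linked into a single binary 'app'.
    Returns a mapping of target -> list of prerequisites."""
    deps = {"app": []}
    for i in range(n_sources):
        obj = f"src{i}.o"
        deps[obj] = [f"src{i}.c", "common.h"]
        deps["app"].append(obj)
    return deps


def top_down_visits(deps, root, memoize):
    """Walk from the final target down through every prerequisite, counting one
    timestamp check per visit. Without memoization, shared prerequisites such
    as common.h are checked once per parent."""
    visits = 0
    seen = set()

    def visit(node):
        nonlocal visits
        if memoize and node in seen:
            return
        seen.add(node)
        visits += 1
        for dep in deps.get(node, []):
            visit(dep)

    visit(root)
    return visits


def bottom_up_visits(deps, changed):
    """Tup-style walk: start from the changed files and follow reverse edges up
    to the targets that depend on them. Unrelated nodes are never visited."""
    dependents = defaultdict(list)
    for target, prereqs in deps.items():
        for prereq in prereqs:
            dependents[prereq].append(target)
    seen = set()
    stack = list(changed)
    while stack:
        node = stack.pop()
        if node in seen:
            continue
        seen.add(node)
        stack.extend(dependents[node])
    return len(seen)


deps = build_graph(1000)
print(top_down_visits(deps, "app", memoize=False))  # 3001
print(top_down_visits(deps, "app", memoize=True))   # 2002
print(bottom_up_visits(deps, ["src7.c"]))           # 3
```

Memoization removes the duplicated checks, but the top-down pass still visits every node in the project, so its cost grows with project size. The bottom-up pass scales with the size of the change instead: editing one .c file touches 3 nodes, while editing common.h would touch 1002. That difference is the heart of the argument in the Tup paper.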

If you want to read more about how Tup uses good algorithms to great effect, you can find more details in the Tup paper Build System Rules and Algorithms (PDF).

Specifically, Make checks the modification timestamps of files. On FAT file systems this can be a slow operation, and if you have to do it on, say, 1k, 10k or 100k intermediate machine-generated files, this overhead can become a very significant bottleneck. If you have some "spinning rust" disks this process can be very, very slow, and across network drives it can be even more brutal.
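Below is a rough sketch of that per-target timestamp check, written against a real file system with Python's `os.stat` so the metadata lookups can be counted. The helper names and the file layout (one up-to-date .o per .c in a temporary directory) are assumptions for illustration, not how Make is implemented internally. The point is that even a no-op build has to look up the timestamp of every target and prerequisite, and each lookup is a trip to the file system.

```python
import os
import tempfile

SRC_TIME_NS = 1_600_000_000 * 10**9     # an arbitrary fixed time (September 2020)
OBJ_TIME_NS = SRC_TIME_NS + 10 * 10**9  # ten seconds later


def out_of_date(target, prereqs, counter):
    """Make's basic rule: rebuild `target` if it is missing or any prerequisite
    has a newer modification time. Each os.stat call is one file-system
    metadata lookup; counter[0] tallies them."""
    counter[0] += 1
    try:
        target_mtime = os.stat(target).st_mtime_ns
    except FileNotFoundError:
        return True
    for prereq in prereqs:
        counter[0] += 1
        if os.stat(prereq).st_mtime_ns > target_mtime:
            return True
    return False


def noop_build(n_files):
    """Create n up-to-date .c/.o pairs in a temporary directory, check every
    object file and return (stale targets, number of stat calls)."""
    with tempfile.TemporaryDirectory() as root:
        rules = []
        for i in range(n_files):
            src = os.path.join(root, f"src{i}.c")
            obj = os.path.join(root, f"src{i}.o")
            for path in (src, obj):
                open(path, "w").close()
            # Pin timestamps so every object file is newer than its source.
            os.utime(src, ns=(SRC_TIME_NS, SRC_TIME_NS))
            os.utime(obj, ns=(OBJ_TIME_NS, OBJ_TIME_NS))
            rules.append((obj, [src]))
        counter = [0]
        stale = [obj for obj, srcs in rules if out_of_date(obj, srcs, counter)]
        return stale, counter[0]


stale, stat_calls = noop_build(1000)
print(stale, stat_calls)  # [] 2000
```

Nothing needs rebuilding, yet 2000 lookups were made. Wrapping `noop_build` in `time.perf_counter()` calls on different disks or a network share is a quick way to see how much that fixed cost varies with the storage underneath.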

When to use makefiles

Makefiles can be a decent choice when you have a few simple instructions that compose with each other and not too many dependencies. When the DAG represented in the Makefile is small enough not to run into Make's various shortcomings, it's an OK option. Take this site, for example: it's statically generated using a static site generator (Pelican) that takes a few commands as inputs and creates some simple outputs:

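```makefile
# PELICAN, INPUTDIR, OUTPUTDIR, CONFFILE and PELICANOPTS are set elsewhere in the Makefile (not shown)
html:
	$(PELICAN) $(INPUTDIR) -o $(OUTPUTDIR) -s $(CONFFILE) $(PELICANOPTS)

new: html live_folder
```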

There are probably other cases where using a Makefile makes sense because of other constraints; just keep a very careful eye out for the tendency to end up in vastly suboptimal path-dependent situations.

[1] The sunk cost fallacy is sometimes referred to as the "Concorde fallacy", since Concorde was a project that faced really huge cost blowouts. Despite the escalating costs, support grew from some groups precisely because so much money had already been spent on the project and they wished to avoid the embarrassment of spending so much without delivering.

[2] For the most part, on any non-toy project I will go out of my way to avoid Makefiles these days. The only Makefile I regularly use is the one for building this website, but if my needs get any more complex than running a few shell commands on a small number of files, I imagine I'll retire it. At the moment, the opportunity cost of changing to a different build system is the only thing keeping me using Makefiles on projects.